Research papers

Machine learning helps find links between youth well-being and academic success

17 February 2022

In a first-of-its-kind study published in Scientific Reports, machine learning researchers at Gradient Institute, working in partnership with psychology researchers at the Australian National University, have found significant evidence for causal links between students’ self-reported well-being and academic outcomes.

AI-LEAP Call for Papers

12 July 2021

AI-LEAP is a new Australia-based conference that aims to explore Artificial Intelligence (AI) from a multitude of perspectives: Legal, Ethical, Algorithmic and Political (LEAP). It draws broadly on computer science, social sciences, and humanities to provide an exciting intellectual forum for a genuinely interdisciplinary exchange of ideas on what is one of the most pressing issues of our time....

Tutorial: Using harms and benefits to ground practical AI fairness assessments in finance

4 March 2021

Gradient’s Chief Practitioner Lachlan McCalman and Principal Researcher Dan Steinberg, together with our colleagues from Element AI, presented a tutorial at the ACM Conference on Fairness, Accountability, and Transparency (FAccT 2021) on 4 March 2021. You can watch a video recording of the full tutorial below.

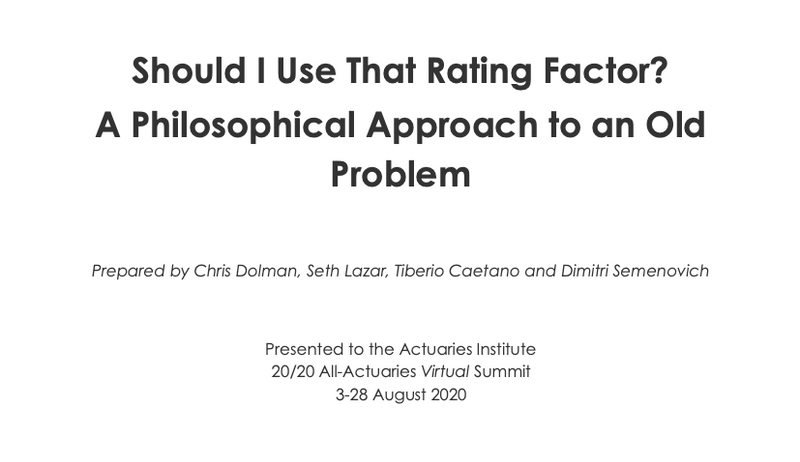

Ethics of insurance pricing

4 August 2020

Gradient Institute Fellows Chris Dolman, Seth Lazar and Dimitri Semenovich, alongside Chief Scientist Tiberio Caetano, have written a paper investigating the question of which data should be used to price insurance policies. The paper argues that even if the use of some “rating factor” is lawful and helps predict risk, there can be legitimate reasons to reject its use. This suggests insurers...

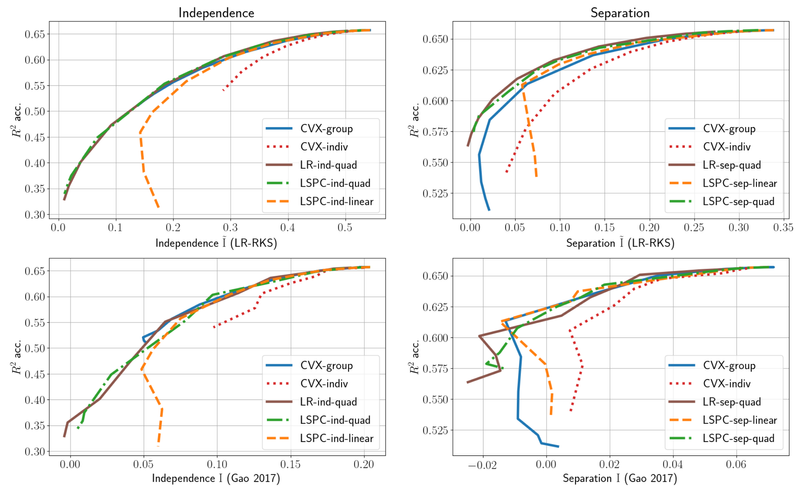

Fast methods for fair regression

25 February 2020

Gradient Institute has written a paper that extends, in a number of ways, the work on fair regression that we submitted to the 2020 Ethics of Data Science Conference. First, the methods introduced in the earlier paper for quantifying the fairness of continuous decisions are benchmarked against “gold standard” (but typically intractable) techniques in order to test their efficacy. The paper also adapts...

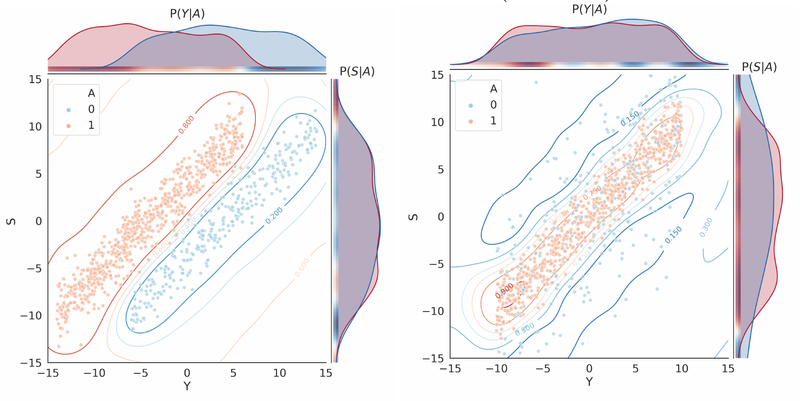

Using probabilistic classification to measure fairness for regression

18 February 2020

Gradient Institute has released a paper (to be presented at the 2020 Ethics of Data Science Conference) studying how to create quantitative, mathematical representations of fairness that can be incorporated into AI systems to promote fair AI-driven decisions.

Causal inference with Bayes rule

13 December 2019

Finn Lattimore, a Gradient Principal Researcher, has published her work on developing a Bayesian approach to inferring the impact of interventions or actions. The work, done jointly with David Rohde (Criteo AI Lab), shows that representing causality within a standard Bayesian approach softens the boundary between tractable and impossible queries and opens up potential new approaches to causal...